Benchmarking JavaScript Loops and Methods - Part 2

Observing the performance of loops and methods when dealing with arrays of objects.

If you are starting with part 2, be sure to check out part 1 by clicking here!

Non-Primitive Values

In part 1, we looked at how different loops and methods find the index or value in an array of primitive values, and what the performance implications of each were. In part 2, we will do the same thing, but target non-primitive values, specifically objects. Since much of a developer’s data handling revolves around arrays of objects served from an API or another data source, this should be relevant to anyone who wants to measure the performance of the loops and methods that JavaScript offers and decide which one to use in a given situation.

We’ll make use of the same loops and methods as in part 1.

Let’s start by defining how these loops and methods work with some starter code examples. We’ll begin with what a non-primitive array looks like and the starter code that each example in our performance test will use. Once again, the examples are more verbose than “one liner” snippets in order to show a few more of the options available when using these loops and methods. We’ll drop includes, lastIndexOf and indexOf from the part 1 list (where they were used for primitive values), since with an array of objects they are usually combined with another method like map.
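To illustrate why indexOf and includes tend to be paired with map for objects, here is a minimal sketch. The `people` array is hypothetical sample data in the same shape as the arrays used in this article, not code from the original benchmark.

```javascript
// Hypothetical sample data, mirroring the object shape used in this article.
const people = [
  { name: 'Alpha', letter: 'A' },
  { name: 'Bravo', letter: 'B' },
  { name: 'Charlie', letter: 'C' }
];

// indexOf uses strict equality, so a new object literal with the same
// contents is a different reference and is never found.
const byReference = people.indexOf({ name: 'Bravo', letter: 'B' });
console.log(byReference); // -1

// Projecting each object to a primitive first makes indexOf and includes usable.
const names = people.map((person) => person.name);
const bravoIndex = names.indexOf('Bravo');
const hasBravo = names.includes('Bravo');
console.log(bravoIndex); // 1
console.log(hasBravo); // true
```

The extra map pass walks the whole array before the search even starts, which is one reason these methods are awkward to compare directly against the others.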

Note: To keep the examples simple, error handling and validation (which could be layered in) are left out, as they are not relevant to this discussion.

Example of Non-Primitive Array Using Objects

    let nonPrimitiveArray = [
      {name: 'Alpha', letter: 'A'},
      {name: 'Bravo', letter: 'B'},
      {name: 'Charlie', letter: 'C'}
    ];

Starter Code

    // Objectives:
    // 1. Find the value Bravo
    // 2. Find its index, which is 1

    const OBJECTIVE_STRING = 'Bravo';

    let arr = [
      {name: 'Alpha', letter: 'A'},
      {name: 'Bravo', letter: 'B'},
      {name: 'Charlie', letter: 'C'},
      {name: 'Delta', letter: 'D'},
      {name: 'Echo', letter: 'E'},
      {name: 'Foxtrot', letter: 'F'},
      {name: 'Golf', letter: 'G'}
    ];

    let foundObject = null;
    let foundIndex = -1;

Example Loop

    // Using array and variables from base code block above…
    for (let index = 0; index < arr.length; index++) {
      const value = arr[index];
      if (value.name === OBJECTIVE_STRING) {
        foundObject = value;
        foundIndex = index;
        break;
      }
    }

    console.log(foundObject);
    // expected output: {name: 'Bravo', letter: 'B'}
    console.log(foundIndex);
    // expected output: 1

For a full list of the loops and methods referenced in this article, click here.
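As a rough illustration of how the same lookup reads with a few of the other constructs, here is a sketch using findIndex, while, and for…of. These are not the exact snippets from the linked list; the variable names `whileIndex` and `forOfIndex` are made up for this example, and the array is a shortened copy of the starter data.

```javascript
const OBJECTIVE_STRING = 'Bravo';
const arr = [
  { name: 'Alpha', letter: 'A' },
  { name: 'Bravo', letter: 'B' },
  { name: 'Charlie', letter: 'C' }
];

// findIndex: stops at the first match and returns -1 when nothing matches.
const foundIndex = arr.findIndex((value) => value.name === OBJECTIVE_STRING);
const foundObject = foundIndex === -1 ? null : arr[foundIndex];

// while: same early exit as the for loop, with a manually managed counter.
let whileIndex = -1;
let i = 0;
while (i < arr.length) {
  if (arr[i].name === OBJECTIVE_STRING) {
    whileIndex = i;
    break;
  }
  i++;
}

// for...of: entries() yields [index, value] pairs so the index is available.
let forOfIndex = -1;
for (const [index, value] of arr.entries()) {
  if (value.name === OBJECTIVE_STRING) {
    forOfIndex = index;
    break;
  }
}

console.log(foundObject, foundIndex, whileIndex, forOfIndex);
```

All three variants stop as soon as the match is found, which matters once the arrays get larger.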

Benchmarking the Code

We now have a basis for benchmarking these loops and methods and can assess how each performs on arrays of objects of various sizes. Once again, we’ll include map, filter and reduce. Using filter here is an anti-pattern, just as it is for map and reduce, because we want to find a value or index rather than build a new value from the original array. This doesn’t mean you can’t use them this way. It only means that we are using them against their intended purpose to show how they perform.
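To make the anti-pattern concrete, here is one possible way map, filter and reduce could be bent into a lookup. This is a sketch under assumptions: the benchmarked implementations are not shown in this article, and the names `items`, `target`, `reduceIndex`, `filterObject` and `mapIndex` are hypothetical.

```javascript
const target = 'Bravo';
const items = [
  { name: 'Alpha', letter: 'A' },
  { name: 'Bravo', letter: 'B' },
  { name: 'Charlie', letter: 'C' }
];

// reduce: carries the first matching index forward as the accumulator.
// It cannot stop early, so every element is still visited.
const reduceIndex = items.reduce(
  (found, item, index) => (found === -1 && item.name === target ? index : found),
  -1
);

// filter: builds a new array of every match, from which we take the first.
const filterObject = items.filter((item) => item.name === target)[0] || null;

// map: used purely for its side effect; the array it returns is thrown away.
let mapIndex = -1;
items.map((item, index) => {
  if (mapIndex === -1 && item.name === target) {
    mapIndex = index;
  }
  return item;
});

console.log(reduceIndex, filterObject, mapIndex);
```

None of these can break out of the iteration, so each one walks the full array even when the match sits at index 1.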

Arrays we will be using:

- Array 1: 100 non-primitive values
- Array 2: 1,000 non-primitive values
- Array 3: 10,000 non-primitive values
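The article does not show how these test arrays were generated, so the following is only a sketch of one way to build arrays of that size. The helper `buildArray` and the generated `name` and `letter` values are assumptions made for illustration.

```javascript
// Hypothetical helper: builds `size` objects shaped like {name, letter}.
function buildArray(size) {
  return Array.from({ length: size }, (_, index) => ({
    name: `Name ${index}`,
    // Cycle through A-Z so every object has a single-letter value.
    letter: String.fromCharCode(65 + (index % 26))
  }));
}

const array1 = buildArray(100);
const array2 = buildArray(1000);
const array3 = buildArray(10000);

console.log(array1.length, array2.length, array3.length); // 100 1000 10000
```

With unique names per element, a target near the beginning or near the end of the array can be chosen simply by picking its index.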

Finalized Results

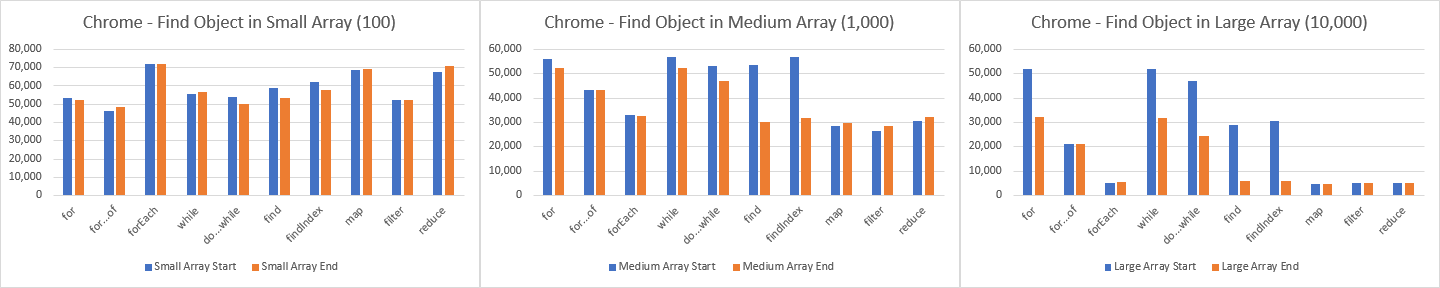

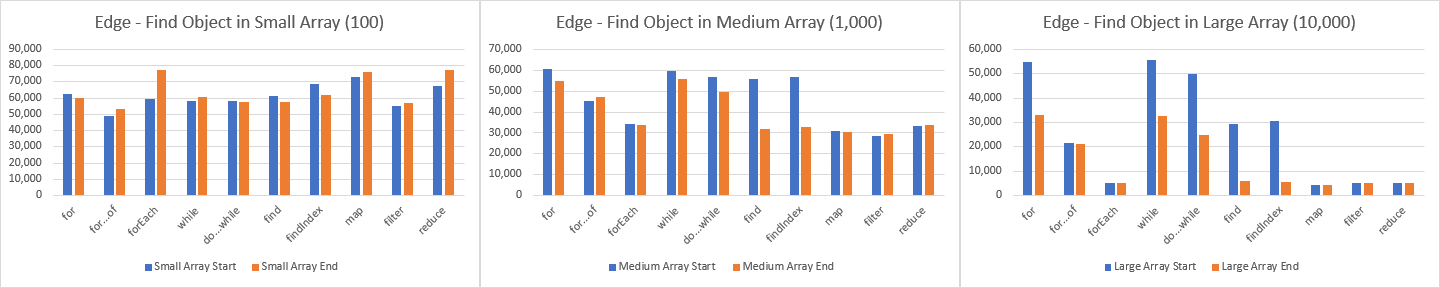

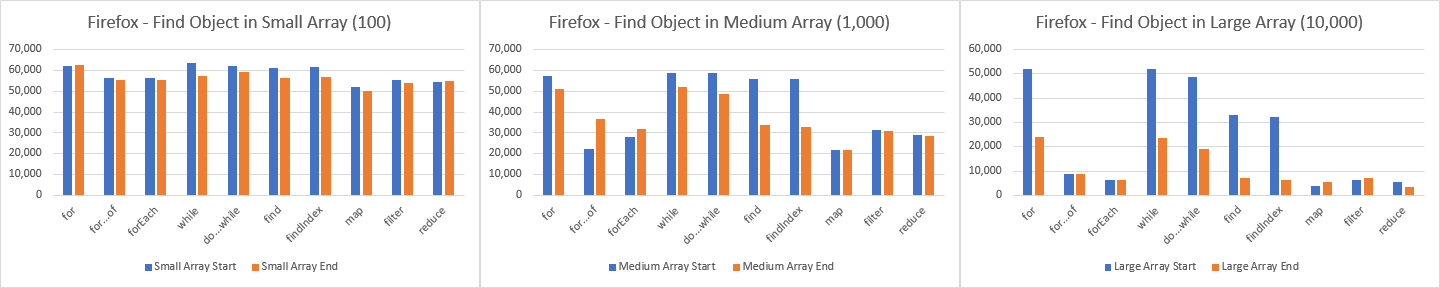

Please remember that results may differ with the hardware and software of each device. Take that into account when planning a code base that has to run on a full range of devices, from the high end to the low end in both quality and speed. The following graphs represent the operations per second (op/s) that each loop or method can run in a given timeframe. This means that each one loops over our various array sizes as many times as possible, with the goal each time of finding the non-primitive value defined in the tests.
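For readers who want a feel for what an op/s figure means, here is a very rough sketch of such a measurement. It is not the tool used to produce the graphs in this article (that tool is not named here); `measureOpsPerSecond`, `sample` and the 100 ms window are assumptions, and a dedicated benchmarking library would handle warm-up and statistical noise far better.

```javascript
// Hypothetical helper: runs `fn` repeatedly for roughly `durationMs`
// milliseconds and reports how many calls completed per second.
function measureOpsPerSecond(fn, durationMs = 200) {
  const start = Date.now();
  let operations = 0;
  while (Date.now() - start < durationMs) {
    fn();
    operations++;
  }
  const elapsedSeconds = (Date.now() - start) / 1000;
  return operations / elapsedSeconds;
}

// Assumed sample data: 1,000 objects, with the target near the end.
const sample = Array.from({ length: 1000 }, (_, index) => ({ name: `Name ${index}` }));
const sampleTarget = 'Name 990';

const opsPerSecond = measureOpsPerSecond(
  () => sample.findIndex((item) => item.name === sampleTarget),
  100
);
console.log(`findIndex: ${Math.round(opsPerSecond)} op/s`);
```

The absolute number will vary from run to run and machine to machine, so only comparisons made on the same device are meaningful.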

Chrome

Edge

Firefox

What Does This Mean?

Now that we have the performance measurements for our browsers (Chrome, Edge, and Firefox), we can make some comparisons and draw conclusions. Let’s take a look at how each of their engines (V8, Chakra, and SpiderMonkey, respectively) handles finding objects by value near the beginning and near the end of an array.

Small Sized Arrays

Generally, the performance of all loops and methods across all browsers is high. Firefox, just as with the primitive values in part 1, achieves the highest number of operations when dealing with small arrays.

- Chrome: forEach, map, and reduce perform quite well, far outpacing the remaining loops and methods.
- Edge: We see the same result here as we did with Chrome. findIndex seems to be slightly more performant than the others as well, but the difference is too small to be very important.
- Firefox: It’s safe to say that the use of just about any loop or method when dealing ONLY with small arrays would be acceptable here.
- Overall Performers: forEach, map, and reduce

Medium Sized Arrays

Performance impacts are more pronounced here than with primitive arrays, and they appear at smaller sizes too. We can start to make more educated decisions about which array manipulation techniques to use in client-side code.

- Chrome: for, while and do…while separate themselves from everyone else fairly easily. At this point, most other loops and methods fail to perform at the same level.
- Edge: The same trend as Chrome is once again seen here. for…of is our only other alternative that has a somewhat positive performance.
- Firefox: The JavaScript engine, SpiderMonkey, follows the same optimization path as its competitors, with for, while and do…while performing the best as well.
- Overall Performers: for, while and do…while

Large Sized Arrays

Looking at the graphs above, it’s safe to say that with all browsers, for, while and do…while are our top performers once again. Once our data sets start to get really large, for…of is the only other loop that performs decently while the rest of our loops and methods have a dramatic loss in performance.

- Overall Performers: for, while and do…while

Conclusion

Just like part 1, it is interesting to see the effect of iterating over different sized data sets with the various loops and methods JavaScript provides. The performance changes dramatically as our data grows. This kind of information should play at least a small part in finding optimizations when dealing with large amounts of data, so that you can plan for performance across all users and devices. I would encourage you to look at the experience you are providing to users and determine whether you can do better by them by improving the way you handle data.